Managing AI in the enterprise is a team sport. In this article, I want to explore specifically what IT asset management (ITAM) brings to the table as we enter the AI era.

As I’ve mentioned in previous articles on this topic, I believe culturally we need to avoid being the governance department of “No” and instead work with the business to help it innovate, setting clear guardrails that keep unnecessary risk in check.

We also want to put our ideas for AI governance into practice without creating yet another system or set of spreadsheets; it can be an evolution of the systems we already have.

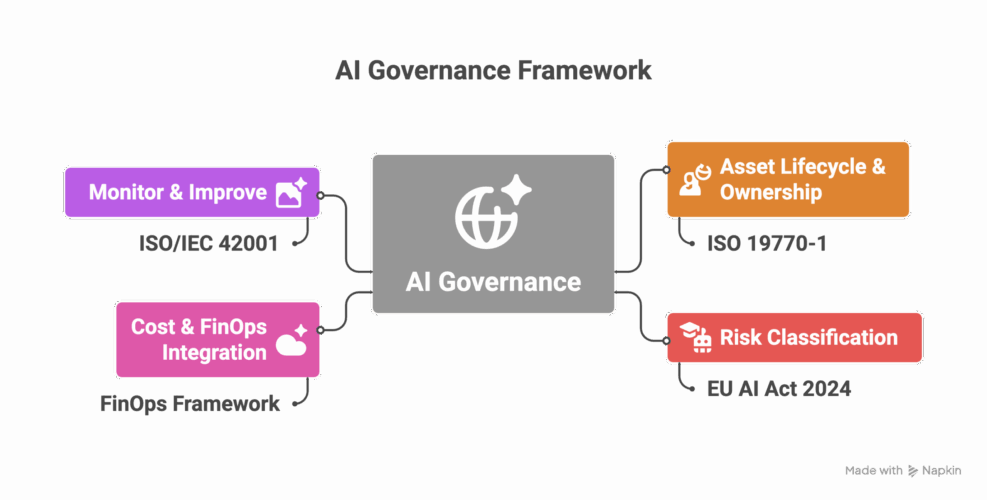

AI governance isn’t a net-new problem but rather an evolution and intersection of existing disciplines:

- Executive leadership can lean on ISO/IEC 42001, the AI management system standard.

- ITIL, DevOps, and ITSM practices cover the delivery of AI systems.

- ITAM best practice and ISO/IEC 19770-1 cover managing IT assets throughout their lifecycle.

- The EU AI Act 2024 is proving to be a yardstick for AI regulation.

- FinOps links investment in AI to the scale and value delivered back to the business.

AI is an asset status, not an asset

Think about a perfectly ordinary laptop ticking along for years. When it enters disposal, its status changes and suddenly a raft of environmental and governance obligations apply. The risk isn’t in the metal and silicon; it’s in the status change.

I believe AI works the same way. You might have a standard stack: data, platforms, and services. Flip the status to “this thing now uses AI in a certain way,” and you inherit new obligations and risks, potentially regulatory ones, just as an asset’s status changes when it holds certain kinds of data. So the job isn’t just to “find AI”; it’s to understand the context of usage and where a change in AI status may elevate risk. Plenty of AI will be benign and need minimal intervention. The trick is knowing which is which.

AI status (my working definition): a flag that a system’s behaviour invokes AI in a way that changes its risk, cost, compliance, or assurance posture.
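To make the working definition concrete, here is a minimal Python sketch of AI status as an extra field on an existing asset record rather than a new asset class. The `AIStatus` values, the `Asset` fields, and the `needs_review` rule are hypothetical illustrations, not a schema from any ITAM tool.

```python
from dataclasses import dataclass, field
from enum import Enum


class AIStatus(Enum):
    """Hypothetical AI-status values added to an existing asset record."""
    NONE = "none"          # no AI involved
    BENIGN = "benign"      # AI present, posture unchanged
    ELEVATED = "elevated"  # AI use changes risk, cost, compliance, or assurance posture


@dataclass
class Asset:
    asset_id: str
    owner: str
    lifecycle_state: str  # e.g. "in_use", "disposal": statuses ITAM already tracks
    ai_status: AIStatus = AIStatus.NONE
    ai_notes: list[str] = field(default_factory=list)

    def flag_ai(self, status: AIStatus, note: str) -> None:
        """Record a change in AI status on the same asset; no new asset is created."""
        self.ai_status = status
        self.ai_notes.append(note)

    def needs_review(self) -> bool:
        """In this sketch, only an elevated AI status triggers extra governance."""
        return self.ai_status is AIStatus.ELEVATED


# Hypothetical example: a vendor upgrade switches on AI features in an existing CRM.
crm = Asset("APP-0042", "sales-ops", "in_use")
laptop = Asset("HW-1187", "finance", "in_use")
crm.flag_ai(AIStatus.ELEVATED, "Upgrade enabled AI lead scoring on customer data")
print(crm.needs_review(), laptop.needs_review())  # True False
```

The design choice mirrors the laptop analogy: the asset record stays the same, and only its status (and the obligations that follow) changes.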

Four practical work streams

If we lean on existing frameworks, the work naturally falls into four areas:

1. Asset lifecycle and ownership

What have we got, where is it, how is it configured, and who owns it? None of this is new to ITAM. Public cloud, SaaS, on-premises: same fundamentals, different wrappers. What is new is watching for Shadow or Grey AI (e.g. an employee uses a personal ChatGPT account and uploads company documents to it to be more productive) and for Trojan AI: AI capabilities that enter products or services and are enabled without scrutiny (e.g. embedded in existing products via upgrades, or services that call AI-based APIs without anyone realising).

2. Identification and risk classification

Once the AI status is in play, classify the risk. The EU AI Act is a useful benchmark even if you’re not in the EU, because it signals what the market will expect. If you’re processing private data or touching on civil rights, you’re squarely in higher-risk territory. Build a simple internal rating so teams know what they’re dealing with.
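A simple internal rating could look like the sketch below. The tier names loosely echo the EU AI Act’s risk levels (prohibited practices such as social scoring, high risk, limited risk with transparency obligations, and minimal risk), but the questions and the mapping are assumptions for illustration that follow the rule of thumb above; they are not a legal classification under the Act.

```python
from enum import IntEnum


class RiskTier(IntEnum):
    """Internal tiers, ordered by scrutiny; names loosely echo the EU AI Act."""
    MINIMAL = 1
    LIMITED = 2
    HIGH = 3
    PROHIBITED = 4


def classify(uses_personal_data: bool,
             affects_rights: bool,
             faces_public: bool,
             social_scoring: bool = False) -> RiskTier:
    """Hypothetical rating rules; tune the questions to your own policy."""
    if social_scoring:
        return RiskTier.PROHIBITED   # a practice the Act bans outright
    if uses_personal_data or affects_rights:
        return RiskTier.HIGH         # the rule of thumb for higher-risk territory
    if faces_public:
        return RiskTier.LIMITED      # e.g. a chatbot that should disclose it is AI
    return RiskTier.MINIMAL


print(classify(uses_personal_data=True, affects_rights=False, faces_public=False).name)  # HIGH
print(classify(False, False, True).name)  # LIMITED
```

Using an ordered `IntEnum` means tiers can be compared directly (for example, `tier >= RiskTier.HIGH`) when deciding which guardrails apply.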

3. Cost, value, and FinOps integration

Tie investment to business performance. If an AI workload is burning cash, FinOps should show the line from spend to value, just as with any other cloud service.
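As a sketch of the spend-to-value line, assuming you can attribute a monthly cost to the workload (for example via AI-status tags on the cloud bill) and agree a business value metric with its owner, a cost-per-unit check might look like this. The field names, the value metric, and the threshold are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AIWorkload:
    name: str
    monthly_cost: float          # attributed spend, e.g. from AI-status tags
    value_units: float           # hypothetical business metric, e.g. tickets resolved
    target_cost_per_unit: float  # hypothetical threshold agreed with the business owner

    def cost_per_unit(self) -> float:
        """Spend divided by delivered value; infinite if no value is measured."""
        if self.value_units <= 0:
            return float("inf")
        return self.monthly_cost / self.value_units

    def is_burning_cash(self) -> bool:
        """True when each unit of value costs more than the agreed target."""
        return self.cost_per_unit() > self.target_cost_per_unit


bot = AIWorkload("support-assistant", monthly_cost=12_000.0,
                 value_units=4_000.0, target_cost_per_unit=2.5)
print(bot.cost_per_unit())    # 3.0
print(bot.is_burning_cash())  # True
```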

4. Monitor, learn, and improve (feed the management system)

Close the loop. What did we learn? What needs tightening? This is where everything feeds back into your management system (e.g. one based on ISO/IEC 42001) so that improvements stick.

Where AI shows up (and what to watch)

- SaaS: we need to answer two questions. a) Who in my organisation is using SaaS-based AI? b) Are they sharing company-confidential information with it? This is not a new challenge for SaaS management; we’ve asked those questions for years, but generative AI has blown the surface area wide open. Inputs (prompts, customer data, internal know-how) and outputs (generated content, derived IP) both carry obligations. It’s not about creating a new spreadsheet; it’s about making sure our existing SaaS hygiene now recognises AI status and treats those flows accordingly. The same techniques used to track SaaS (browser extensions, network traffic, SSO, etc.) can help identify AI.

- Public cloud: the controls aren’t new; your infosec and FinOps muscles already exist. The difference is explicitly tagging AI status so you can separate benign workloads from those that need extra scrutiny. Boiled down to first principles, AI regulation is about protecting people’s protected characteristics. What are you using to track that now? How could it be extended to AI? Cost to value, risk to control: the same loop, just with more intelligent tagging and more precise classification.

- On-premises: the story is similar. If a workload stays ordinary, keep calm and carry on; when it starts using AI in a meaningful way, attach the status, classify the risk, and apply the appropriate guardrails.

- Vendors: more and more suppliers are baking AI into their platforms, often as a toggle or feature set that can appear mid-contract. Tooling should help customers see where models and AI features are in use and assist with classification. No one is going to maintain a hand-rolled inventory of “all AI in the estate”; we need automation.
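The SaaS discovery techniques above can be sketched as a pass over proxy or SSO logs. This minimal Python example assumes a hypothetical log format (`user`, `url`, `bytes_out`), an illustrative (not maintained) watchlist of AI SaaS domains, and an arbitrary upload threshold as a rough proxy for the second question: whether company data may be leaving.

```python
from collections import defaultdict
from urllib.parse import urlparse

# Illustrative watchlist only; a real one would come from your SaaS management tool.
AI_SAAS_DOMAINS = {"chatgpt.com", "claude.ai", "gemini.google.com"}
UPLOAD_ALERT_BYTES = 1_000_000  # hypothetical threshold for "large upload"


def matches_watchlist(host: str) -> bool:
    """Match a watched domain itself or any of its subdomains."""
    return any(host == d or host.endswith("." + d) for d in AI_SAAS_DOMAINS)


def ai_saas_usage(events):
    """Return (users per AI domain, large uploads) from hypothetical log events."""
    users = defaultdict(set)
    large_uploads = []
    for e in events:
        host = urlparse(e["url"]).hostname or ""
        if not matches_watchlist(host):
            continue
        users[host].add(e["user"])  # question a): who is using SaaS-based AI
        if e["bytes_out"] >= UPLOAD_ALERT_BYTES:
            # question b): possible sharing of company information
            large_uploads.append((e["user"], host, e["bytes_out"]))
    return dict(users), large_uploads


events = [
    {"user": "alice", "url": "https://chatgpt.com/c/1", "bytes_out": 2_500_000},
    {"user": "bob", "url": "https://intranet.example.com/", "bytes_out": 10},
    {"user": "bob", "url": "https://claude.ai/new", "bytes_out": 500},
]
users, uploads = ai_saas_usage(events)
print(users)
print(uploads)
```

A byte count cannot tell you what was shared, so in practice a hit like this would prompt a conversation or a DLP check rather than an automatic verdict.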

Managing this at scale

ITAM tools need to help identify this change in asset status, and the data involved, if they are to remain relevant over the next 5-10 years. That requires existing ITAM tools to become more CMDB-like, managing relationships and connectivity beyond the asset itself. The NIST AI Risk Management Framework calls this “Map”: “Context is recognized and risks related to context are identified.” The EU AI Act 2024 likewise refers to the “context” of use.
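A CMDB-style view of context can be sketched as a walk over dependency relationships: the same AI component carries different risk depending on which business service reaches it and what data sits alongside it. The service names, the `DEPENDS_ON` map, and the `AI_COMPONENTS` set below are hypothetical.

```python
from collections import deque

# Hypothetical CMDB-style relationships: each item lists what it depends on.
DEPENDS_ON = {
    "svc:loan-approval": ["app:credit-engine"],
    "app:credit-engine": ["api:scoring-model", "db:customer-records"],
    "svc:intranet": ["app:cms"],
    "app:cms": [],
    "api:scoring-model": [],
    "db:customer-records": [],
}
AI_COMPONENTS = {"api:scoring-model"}


def ai_in_context(service: str) -> set[str]:
    """Return AI components reachable from a service (breadth-first walk)."""
    seen, found = {service}, set()
    queue = deque([service])
    while queue:
        node = queue.popleft()
        if node in AI_COMPONENTS:
            found.add(node)
        for dep in DEPENDS_ON.get(node, []):
            if dep not in seen:  # avoid revisiting shared dependencies
                seen.add(dep)
                queue.append(dep)
    return found


print(ai_in_context("svc:loan-approval"))  # {'api:scoring-model'}
print(ai_in_context("svc:intranet"))       # set()
```

Here the scoring model only matters because a loan-approval service reaches it next to customer records; the relationship, not the component alone, is what raises the risk.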

This points to another existing convention rather than starting from scratch: recording every AI component within a system in an open register, an AI bill of materials (AIBOM), so that automated systems can pick it up and identify AI at scale. AI components then become easily identifiable, easily traceable, and immediately recognisable when they enter the organisation. That’s how we stop playing whack-a-mole and start treating AI as a transparent, governable status within the asset estate. Further reading on AIBOM.
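As an illustration of an AIBOM entry that automated systems could read, here is a minimal document loosely modelled on CycloneDX, whose 1.5 specification added a `machine-learning-model` component type. Only a handful of fields are shown, and the system, model, and supplier names are hypothetical; the CycloneDX specification defines the full schema.

```python
import json


def make_aibom(system_name: str, models: list[dict]) -> dict:
    """Build a minimal AIBOM-style document using CycloneDX-like field names."""
    return {
        "bomFormat": "CycloneDX",
        "specVersion": "1.5",
        # The system this BOM describes.
        "metadata": {"component": {"type": "application", "name": system_name}},
        # One entry per AI model embedded in the system.
        "components": [
            {
                "type": "machine-learning-model",
                "name": m["name"],
                "version": m["version"],
                "supplier": {"name": m["supplier"]},
            }
            for m in models
        ],
    }


def ai_components(bom: dict) -> list[str]:
    """Return model component names so a scanner can set the asset's AI status."""
    return [c["name"] for c in bom.get("components", [])
            if c.get("type") == "machine-learning-model"]


# Hypothetical example: a customer portal embedding a vendor summarisation model.
bom = make_aibom("customer-portal",
                 [{"name": "ticket-summariser", "version": "2.1",
                   "supplier": "ExampleVendor"}])
print(json.dumps(bom, indent=2))
print(ai_components(bom))  # ['ticket-summariser']
```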

Summary: AI is an asset status, not an asset

By treating AI as a lifecycle flag rather than an entirely new asset class, ITAM professionals can leverage existing frameworks, tools, and disciplines to manage risk, cost, and compliance at scale.

This isn’t about building a new governance machine. It’s about adding an AI-status signal to the machines we already run, and then doing four things well:

Tag >> Classify >> Tie spend to value >> Improve.

Do that consistently, and you’ll give the business the space to innovate without drowning everyone in admin.