Chatbot Turbulence: Air Canada and its Misguided AI Autopilot

Air Canada recently learned that AI chatbots cannot always be trusted…

There is a lot of talk about AI within ITAM and how it can help streamline processes, save human time, improve accuracy, and reduce errors and conflicts. Many of the potential uses being imagined, theorised, and discussed involve contracts in scenarios such as:

- Reading contracts for potential problems

- Providing summaries of key points from contracts

- Interpreting contractual repositories (such as Microsoft Product Terms) to determine (non)compliance

- Chatbots to summarise and provide information

All presented as ways to make things easier, clearer, and faster.

I’m a big fan of AI and see many benefits, both now and in the future, but I am also hesitant about how quickly it should be implemented, adopted, and relied upon. It’s known that AI is far from infallible, and the vendors themselves acknowledge this.

We have spoken before about the need to check and verify data produced by AI tools in ITAM, particularly when there may be millions of £/$/€ at stake. However, if everything has to be checked and verified, that may negate much of the benefit offered by using AI and, perhaps most importantly, human users will not always do that before relying on the data. Some may see that as “user error” but, realistically, it’s human nature and isn’t unusual.

This leads me to a recent situation in Canada which involved a user, a chatbot, some information, and a lawsuit.

What happened with Air Canada’s AI chatbot?

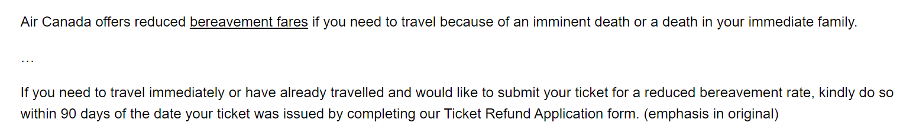

A customer, Mr Moffatt, needed information about Air Canada’s bereavement travel policy, so he headed to the airline’s website, where he was engaged by the Air Canada chatbot. In answer to his question, the chatbot told him he had 90 days from booking his ticket to claim a refund for the reduced bereavement rate.

When he contacted Air Canada to process the almost $900 refund, he was unsuccessful as the actual policy is that “bereavement policy does not apply to requests for bereavement consideration after travel has been completed”. This policy is displayed on a webpage entitled “Bereavement travel” which is linked to from the underlined text in the chatbot’s reply.

An Air Canada representative admitted that the Air Canada AI chatbot had used “misleading words” but the company claimed that “it cannot be held liable for information provided by one of its agents, servants, or representatives – including a chatbot”.

The Civil Resolution Tribunal (CRT) found Air Canada liable for “negligent misrepresentation”, where “a seller does not exercise reasonable care to ensure its representations are accurate and not misleading”, and it makes some good points in the judgment:

- It should be obvious to Air Canada that it is responsible for all the information on its website. It makes no difference whether the information comes from a static page or a chatbot

- Air Canada does not explain why the webpage titled “Bereavement travel” was inherently more trustworthy than its chatbot

- Air Canada does not explain why customers should have to double-check information found in one part of its website on another part of its website

- There is no reason why Mr. Moffatt should know that one section of Air Canada’s webpage is accurate, and another is not

The complaint was successful and Air Canada were ordered to pay $812.02 to Mr Moffatt to cover damages, interest, and fees.

It is to be noted that Air Canada did.

ITAM parallels

It’s clear to see the similarities here with potential ITAM and licensing scenarios such as:

- An ITAM manager uses data provided by a SAM tool chatbot in a renewal/negotiation

- Data provided by a 3rd-party tool chatbot that reads contracts and terms is used to prepare for a purchase

- An internal user bases a system architecture decision on licensing/contract information provided by an internal (or 3rd-party) chatbot

Imagine then, in each case, that the organisation is later found to be non-compliant, no doubt for amounts far larger than $800!

From reading the Tribunal information (here) it appears that Air Canada took a relatively lackadaisical approach to this case – likely due to the low sum of money involved. In a software non-compliance scenario involving an aggressive auditor such as Quest or Oracle, one would have to assume that contracts would be provided, clauses argued, and positions strenuously defended. That would make it a more costly and time-intensive endeavour, perhaps with a lower chance of success too.

Finally – if there’s the risk that chatbots will provide incorrect information which may cost you 100s of hours of time and millions of £$€…will there be any real use for AI chatbots within/between organisations?

ChatGPT loses the plot

As a further cautionary tale, ChatGPT has recently started to generate nonsensical replies to users, many of which have been shared on Reddit here. In one example, when asked “What is a computer?”, part of its reply was:

While these hallucinations are easy to spot, there is always the chance that something more subtle – like the Air Canada AI example – will appear within a chatbot’s response. The moral of the story? Be very, very careful if using any AI chatbot or assistant when making high-stakes decisions.
